8.6. Feature Matching

8.6.1. Basics of Brute-Force Matcher

The brute-force matcher is simple. It takes the descriptor of one feature in the first set and compares it with every feature in the second set using some distance calculation. The closest one is returned.
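The idea can be sketched in a few lines of plain Python. This is only an illustration of the logic, not how OpenCV implements it: the function name `brute_force_match` and the tiny 2-D "descriptors" are made up for the example, and Euclidean distance is assumed as the distance calculation.

```python
import math

def brute_force_match(query, train):
    """For each query descriptor, return (query_idx, train_idx, distance)
    for the closest descriptor in the train set."""
    matches = []
    for qi, q in enumerate(query):
        # Compare this descriptor against every descriptor in the second set
        # and keep the one with the smallest Euclidean distance.
        best_ti, best_dist = min(
            ((ti, math.dist(q, t)) for ti, t in enumerate(train)),
            key=lambda pair: pair[1],
        )
        matches.append((qi, best_ti, best_dist))
    return matches

# Toy 2-D descriptors (hypothetical data, real descriptors are much longer).
query = [(0.0, 0.0), (5.0, 5.0)]
train = [(4.0, 6.0), (1.0, 0.0), (9.0, 9.0)]
matches = brute_force_match(query, train)
```

Every query descriptor always gets a match here, even if the closest candidate is far away, which is why some form of filtering is usually applied afterwards.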

To use the BF matcher, we first create a BFMatcher object using cv2.BFMatcher(). It takes two optional parameters. The first is normType, which specifies the distance measurement to use. By default it is cv2.NORM_L2, which is good for SIFT, SURF, etc. (cv2.NORM_L1 is also available). For binary-string-based descriptors such as ORB, BRIEF, and BRISK, cv2.NORM_HAMMING should be used, which uses Hamming distance as the measurement. If ORB is using WTA_K == 3 or 4, cv2.NORM_HAMMING2 should be used.
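To make the Hamming case concrete, here is a minimal sketch of the distance itself, written in plain Python so that it runs without OpenCV. The helper name `hamming_distance` and the two 2-byte descriptors are invented for illustration (a default ORB descriptor is 32 bytes long).

```python
def hamming_distance(a: bytes, b: bytes) -> int:
    """Number of differing bits between two equal-length binary descriptors."""
    if len(a) != len(b):
        raise ValueError("descriptors must have the same length")
    # XOR leaves a 1 wherever the bits differ; count those 1s byte by byte.
    return sum(bin(x ^ y).count("1") for x, y in zip(a, b))

# Two hypothetical 2-byte binary descriptors.
d1 = bytes([0b10110000, 0b00001111])
d2 = bytes([0b10010000, 0b00001100])
dist = hamming_distance(d1, d2)  # one differing bit in byte 0, two in byte 1
```

Because the descriptor is a bit string rather than a vector of real numbers, counting differing bits is a more natural measure than an L2 norm.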

The second parameter is a boolean variable, crossCheck, which is false by default. If it is true, the matcher returns only those matches (i, j) such that the i-th descriptor in set A has the j-th descriptor in set B as its best match and vice versa. That is, the two features must match each other in both directions. This gives consistent results and is a good alternative to the ratio test proposed by D. Lowe in the SIFT paper.
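The cross-check rule can be sketched as a mutual-nearest-neighbour filter. The code below is an illustration of the rule only, under the assumption of one-dimensional toy descriptors and an absolute-difference distance; `nearest` and `cross_check_matches` are hypothetical helper names, not OpenCV functions.

```python
def nearest(src, dst, dist):
    """Index of the closest descriptor in dst for every descriptor in src."""
    return [min(range(len(dst)), key=lambda j: dist(s, dst[j])) for s in src]

def cross_check_matches(set_a, set_b, dist):
    """Return the (i, j) pairs that are each other's best match."""
    a_to_b = nearest(set_a, set_b, dist)
    b_to_a = nearest(set_b, set_a, dist)
    # Keep (i, j) only if j is i's best match AND i is j's best match.
    return [(i, j) for i, j in enumerate(a_to_b) if b_to_a[j] == i]

def abs_diff(x, y):
    return abs(x - y)

# Toy 1-D descriptors (hypothetical data).
set_a = [1.0, 2.0, 10.0]
set_b = [1.1, 9.0]
pairs = cross_check_matches(set_a, set_b, abs_diff)
```

In this toy data, the descriptor 2.0 in set A picks 1.1 as its best match, but 1.1 prefers 1.0, so that one-sided match is dropped.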

Once it is created, two important methods are BFMatcher.match() and BFMatcher.knnMatch(). The first returns the best match for each descriptor. The second returns the \(k\) best matches, where \(k\) is specified by the user. It may be useful when we need to do additional work on the matches.
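One common kind of additional work on the k best matches is a ratio test with k=2: keep the best match only if it is clearly closer than the second best. The sketch below mimics that logic in plain Python so it runs without OpenCV. The helper names, the toy 1-D descriptors, and the 0.75 threshold are assumptions chosen for illustration.

```python
import heapq

def knn_match(query, train, dist, k=2):
    """For each query descriptor, return the k closest (train_idx, distance)
    pairs, best first."""
    result = []
    for q in query:
        scored = ((ti, dist(q, t)) for ti, t in enumerate(train))
        result.append(heapq.nsmallest(k, scored, key=lambda pair: pair[1]))
    return result

def ratio_test(knn, ratio=0.75):
    """Keep a query's best match only if it is clearly better than the
    second-best candidate. The 0.75 threshold is an assumed value."""
    good = []
    for qi, candidates in enumerate(knn):
        if len(candidates) < 2:
            continue  # nothing to compare against
        (best_ti, best_d), (_, second_d) = candidates[:2]
        if best_d < ratio * second_d:
            good.append((qi, best_ti))
    return good

def abs_diff(x, y):
    return abs(x - y)

# Toy 1-D descriptors (hypothetical data).
query = [1.0, 5.0]
train = [1.2, 4.0, 6.0, 20.0]
knn = knn_match(query, train, abs_diff, k=2)
good = ratio_test(knn)
```

The second query descriptor (5.0) is equally close to 4.0 and 6.0, so its best match is ambiguous and the ratio test rejects it.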

Just as we used cv2.drawKeypoints() to draw keypoints, cv2.drawMatches() helps us draw the matches. It stacks the two images horizontally and draws lines from the first image to the second image showing the best matches. There is also cv2.drawMatchesKnn(), which draws all the k best matches. If k=2, it will draw two match lines for each keypoint, so we have to pass a mask if we want to draw them selectively.

Let’s see one example for each of SURF and ORB (Both use different distance measurements).

8.6.2. Brute-Force Matching with ORB Descriptors

Here, we will see a simple example of how to match features between two images. In this case, I have a queryImage and a trainImage. We will try to find the queryImage in the trainImage using feature matching. (The images are /samples/c/box.png and /samples/c/box_in_scene.png.)

We are using ORB descriptors to match features. So let’s start with loading the images, finding descriptors, etc.

>>> import numpy as np

>>> import cv2 as cv

>>> import matplotlib.pyplot as plt

>>> img1 = cv.imread('/cvdata/box.png',cv.IMREAD_GRAYSCALE) # queryImage

>>> img2 = cv.imread('/cvdata/box_in_scene.png',cv.IMREAD_GRAYSCALE) # trainImage

>>> # Initiate ORB detector

>>> orb = cv.ORB_create()

>>> # find the keypoints and descriptors with ORB

>>> kp1, des1 = orb.detectAndCompute(img1,None)

>>> kp2, des2 = orb.detectAndCompute(img2,None)

Next we create a BFMatcher object with the distance measurement cv2.NORM_HAMMING (since we are using ORB), and crossCheck is switched on for better results. Then we use the BFMatcher.match() method to get the best matches between the two images. We sort them in ascending order of distance so that the best matches (with low distance) come first. Then we draw only the first 10 matches (just for the sake of visibility; you can increase the number as you like).

>>> # create BFMatcher object

>>> bf = cv.BFMatcher(cv.NORM_HAMMING, crossCheck=True)

>>> # Match descriptors.

>>> matches = bf.match(des1,des2)

>>> # Sort them in the order of their distance.

>>> matches = sorted(matches, key = lambda x:x.distance)

>>> # Draw first 10 matches.

>>> img3 = cv.drawMatches(img1,kp1,img2,kp2,matches[:10],None,flags=cv.DrawMatchesFlags_NOT_DRAW_SINGLE_POINTS)

>>> plt.imshow(img3),plt.show()

(<matplotlib.image.AxesImage at 0x7f4c01ab2210>, None)

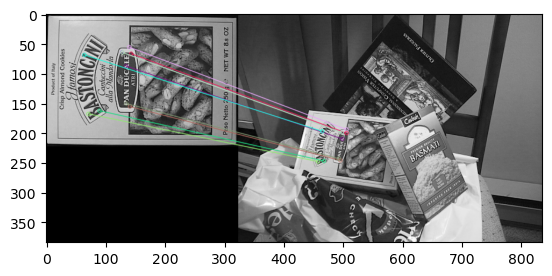

Below is the result I got:

What is this Matcher Object?

The result of the matches = bf.match(des1,des2) line is a list of DMatch objects. A DMatch object has the following attributes:

DMatch.distance - Distance between descriptors. The lower, the better.

DMatch.trainIdx - Index of the descriptor in the train descriptors.

DMatch.queryIdx - Index of the descriptor in the query descriptors.

DMatch.imgIdx - Index of the train image.
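Since queryIdx and trainIdx are indices into the descriptor arrays, they also index the matching keypoint lists, so a match can be turned back into pixel coordinates. The sketch below shows this lookup using small stand-in types, so that it runs without OpenCV. `Match`, `KeyPoint`, and `matched_points` are hypothetical names. The stand-ins only imitate the queryIdx, trainIdx, and distance attributes listed above and the `pt` attribute of an OpenCV keypoint.

```python
from collections import namedtuple

# Stand-ins for cv2.DMatch and cv2.KeyPoint, defined only so that this
# sketch runs without OpenCV. They are not the real classes.
Match = namedtuple("Match", ["queryIdx", "trainIdx", "distance"])
KeyPoint = namedtuple("KeyPoint", ["pt"])

def matched_points(matches, kp_query, kp_train):
    """Return [(query_xy, train_xy), ...] ordered from best to worst match."""
    ordered = sorted(matches, key=lambda m: m.distance)
    # queryIdx indexes the query keypoints, trainIdx the train keypoints.
    return [(kp_query[m.queryIdx].pt, kp_train[m.trainIdx].pt) for m in ordered]

# Hypothetical keypoints and matches.
kp1 = [KeyPoint((10.0, 20.0)), KeyPoint((30.0, 40.0))]
kp2 = [KeyPoint((110.0, 25.0)), KeyPoint((135.0, 42.0)), KeyPoint((5.0, 5.0))]
matches = [Match(0, 1, 48.0), Match(1, 0, 12.0)]
pairs = matched_points(matches, kp1, kp2)
```

With real OpenCV objects, the same lookup would use the kp1 and kp2 lists returned by detectAndCompute() and the list returned by bf.match().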